AI and Human-Computer Symbiosis

Imagining a world with hyper-personalisation and extreme delegation to AI agents.

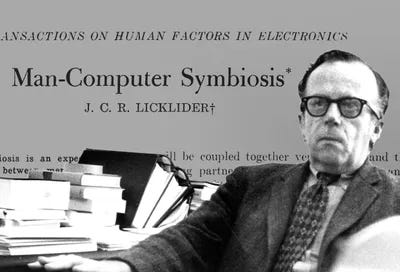

In 1960, J.C.R. Licklider, a man widely considered to be one of the fathers of modern computing, famously authored the paper Man-Computer Symbiosis. In it, he painted an illustrative picture of the way humans and computers would interact with each other. At the time, many considered it to be a grand illusion.

More than 60 years later, LLMs have exploded onto the scene and many of his visions don’t seem too far-fetched. In this article, I’ll share some very rough hypotheses on what this may look like.

The Fig Tree

Licklider provides an eloquent analogy: “The fig tree is pollinated only by the insect Blastophaga grossorun. The larva of the insect lives in the ovary of the fig tree, and there it gets its food. The tree and the insect are thus heavily interdependent: the tree cannot reproduce without the insect; the insect cannot eat without the tree; together, they constitute not only a viable but a productive and thriving partnership. This cooperative ‘living together in intimate association, or even close union, of two dissimilar organisms’ is called symbiosis.”

His hope was that humans and computers would be coupled together very tightly, with the resulting partnership thinking “in a way no human brain has ever thought and processing data in a way not approached by the information-handling machines we know today”.

Where Are We Now?

Anyone who’s experienced the magic of LLMs will agree that we’ve levelled up in mankind’s inevitable march towards symbiosis. But what might this symbiosis look like in 10 years? Here are two somewhat opposed concepts that I’ve been thinking about: extreme delegation to AI agents, and hyper-personalisation. [1]

Extreme Delegation to AI Agents

Imagine a world where we interact with software tools and each other via our own AI agents. If I need to create a presentation with my team, our AI agents collaborate by going into Gmail and Notion to understand all relevant context, and then spin something up in Canva. Then they report back to us with a list of questions, find us a time to meet, create an agenda, and we respond to their questions to drive the next iteration of the presentation.

Or, if we’re a lean startup developing a new feature, our AI agents across engineering, marketing and operations come together to build a roadmap. Along the way, they ask us questions and iterate on our feedback until they can present a holistic plan. Then they go and autonomously build the feature.

This might sound far-fetched, but with tools like AutoGPT and Rewind, AI agents will be able to understand all the necessary context and execute tasks by breaking them into sub-tasks. Furthermore, these agents will be able to collaborate, mostly without a human in the loop. This brings to mind three implications:

Trust as the Barrier: Currently, AI tools can do many forms of research and synthesis, but they don’t save us much time because we don’t trust the end result. Reviewing the output from an AI tool is only marginally quicker than doing the work yourself. This is especially true for fields where the tolerance for faulty work is low (like healthcare or financial services). If you’ve ever had an internship, you may have experienced this from the vantage point of the AI tool. No one is eager to give you work because it’s going to take time to train you, and even longer to double-check your work. The only way to prove your worth is to repeatedly produce high-quality work. A similar effect will play out with AI, whereby we won’t trust our agents until they prove to be worthy members of the team.

Knowledge Work is Just Decision Making: In his paper, Licklider estimated that most of his “time went into finding or obtaining information, rather than digesting it”. I suspect that most knowledge workers (even today) feel the same. As we trust AI to do the obtaining and synthesising for us, the scope of knowledge work will continuously decrease until we are effectively just decision-makers. Humans make the decisions, and our AI agents execute on them. Those who possess the double whammy of being able to make high-velocity and high-quality decisions will do the best. This is particularly applicable to my own profession, as access to investments and quality of information synthesis increasingly become a level playing field. The alpha in VC will come from making good decisions quickly.

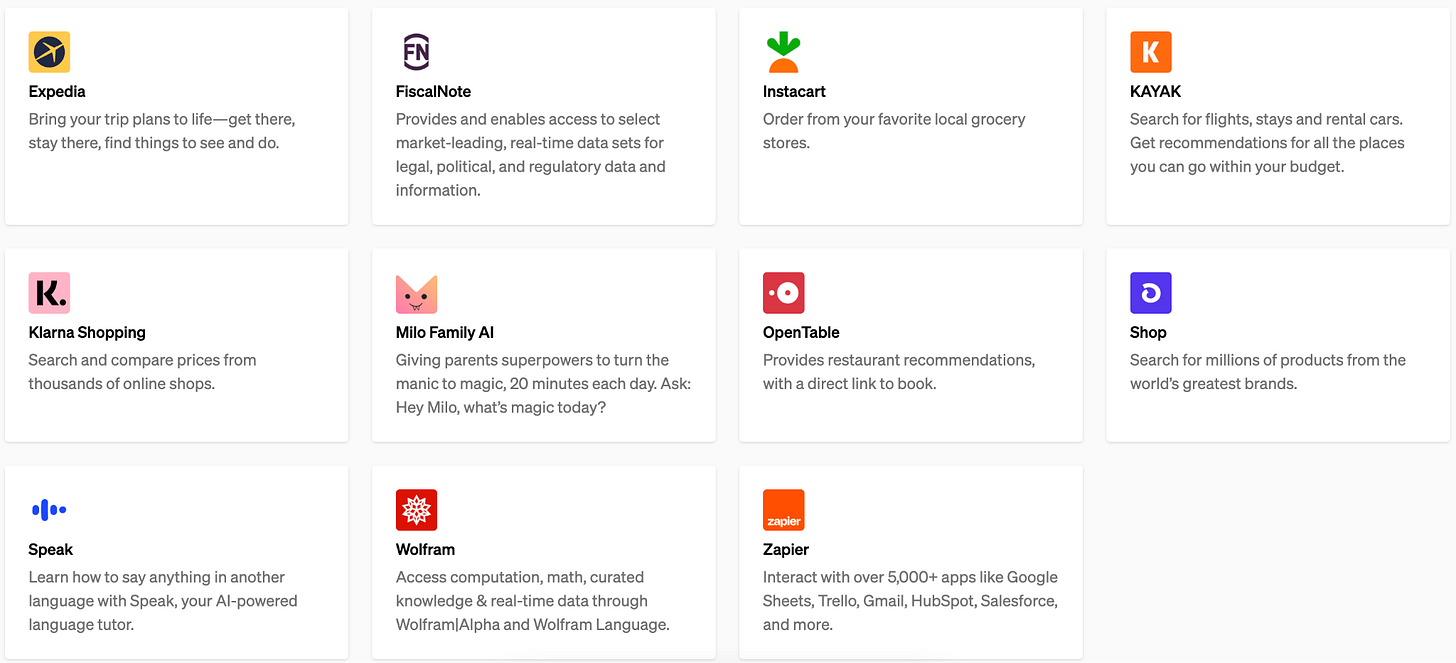

Software is Abstracted Away: If we’re all just guiding our AI agents, human interaction with certain kinds of software declines to nil. Instead of going into Figma and Xcode 10 times a day, my agent will do all the execution work in these platforms, and just provide me with the output. The functionality provided by these software tools will still be valuable; we just may not engage with their frontends as we do now. ChatGPT’s plugins are reminiscent of this, where we can now shop, make reservations and plan travel largely through a natural language interface. Longer term, these tools may simply be absorbed by a general-purpose AI.

Choose Your Own Adventure

If that all sounds pretty grim, let me paint another picture.

A popular narrative today is that with AI, we will be able to create anything we want – whether it be a novel, a TV show, or even software. I find this logic fascinating because whilst humans inherently have the desire to be creative, we are also inherently lazy.

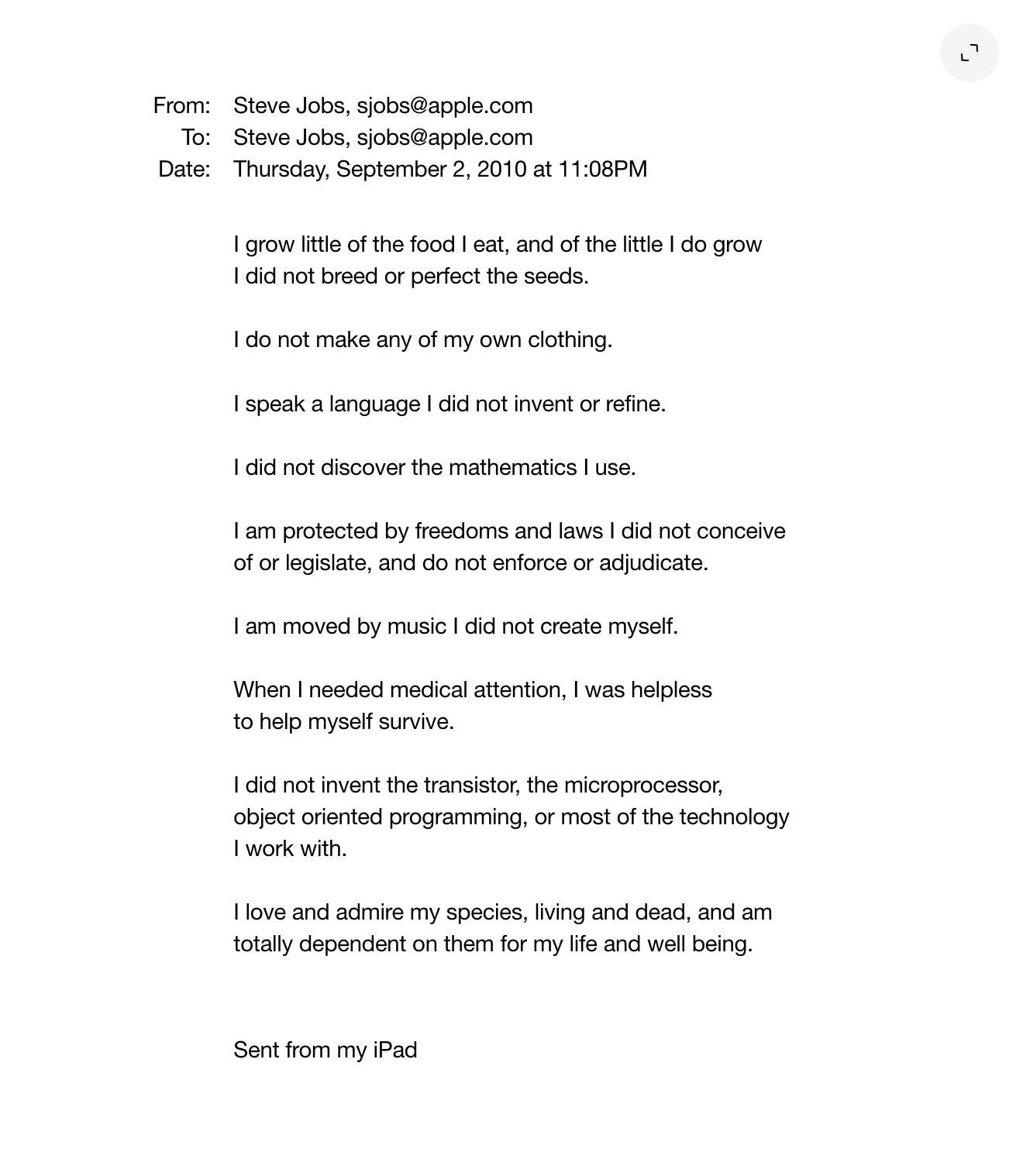

We might experiment every now and then with using AI to create our own music or movies, but for the most part, we will rely on the ingenuity of others. If you’re not convinced of this, the email below by Steve Jobs explains this far more eloquently than I can.

Despite us having the power to be more creative than ever before, we’ll still be highly dependent on the creativity of others. Even if I can create my own tools with AI, I’d still rather use products or consume content created by people who are far more talented than me.

This is where I think we enter a world of hyper-personalisation. Many years ago, personalisation just meant marketers sending mass emails with “Dear Sid”, rather than “Dear Sir or Madam”. Nowadays, we have highly targeted advertising, and companies develop their products to hold our attention. But we all still use the same things – my version of iOS is the same as yours, as is my Gmail and my Slack. We might customise elements of the software differently and the content we’re fed will certainly be different, but the actual building blocks are the same.

I’m excited by a world where companies take “co-creating with their customers” to a whole new level. If you think about it, the fact that everyone is fed the same version of an operating system doesn’t make any sense. We all have different preferences and interact with software differently. So why shouldn’t our operating systems learn from these interactions and self-customise to suit our preferences? The same is true of many software products, and even hardware.

The technical challenges of achieving this are immense, but nonetheless, it’s fun to imagine a world where our symbiosis with AI results in us each having highly customised tools that learn from our preferences and feedback.

Having said all of this, our symbiosis with technology most likely looks like something I can’t even imagine. The point has been made that AI breaks down the barrier of what an application is. Today, an app or software tool is a packaged container that helps us with certain tasks. AI agents and extreme co-creation both blur that boundary, as they have the ability to perform tasks across disparate domains. If this proves to be true, we may move away from single-purpose apps to an “OS-level” assistant.

Either way, one thing is clear – our interactions with technology are going to shift significantly in the next decade. New business models will emerge, and I can’t wait to back the ambitious founders building them.

[1] These are fairly unbaked ideas with many holes to be poked.